[CA5] Large and Fast: Exploiting Memory Hierarchy

- Introduction

- DRAM Organization & Operation

- Memory Hierarchy: Principle of Locality

- Caches

- Cache Block Size

- Main Memory & Caches

- Write Policies

- Cache Performance

- Associative Caches

- Multilevel Caches

- Cache’s Interaction with Advanced CPUs and Software

Introduction

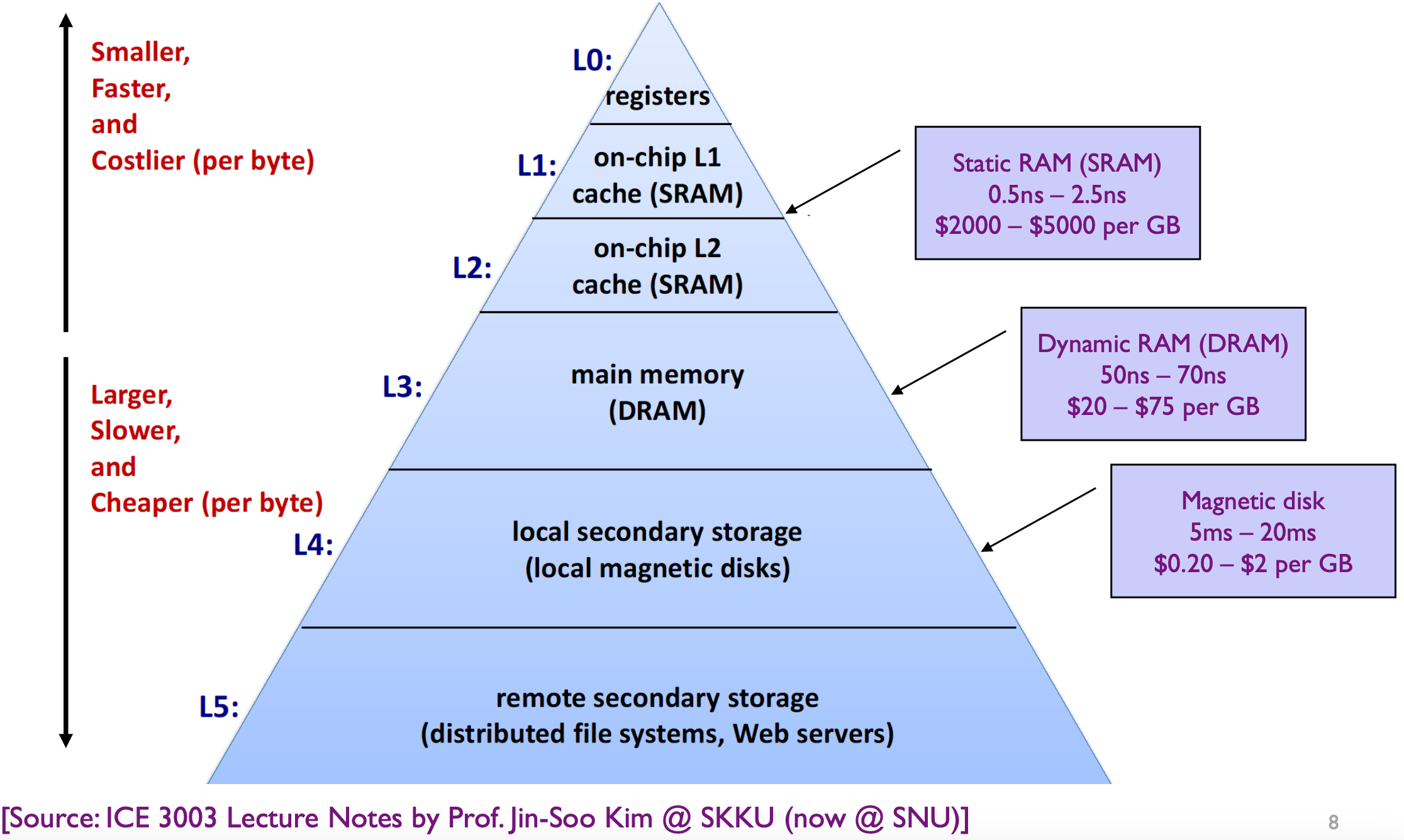

Memory hierarchy: A structure that uses multiple levels of memories; as the distance from the processor increases, the size of the memories and the access time both increase, while the cost per bit decreases.

DRAM Organization & Operation

DRAM configuration

- strobe: A signal used to time reading that is output together with the data.

- Block (line): The unit of data held in a cache.

Note: CPU register ‘line’ == word (4B)

- Large capacity

- Arranged as 2D matrix

- Narrow data interface: 1-16 bits (x1, x4, x8, x16)

- Cheap packages => Few bus pins.

- Pins are expensive.

- Narrow address interface

- Multiplexed address strobe lines: row and column address

- Signaled by RAS# and CAS#, respectively. (RAS: Row Address Strobe, CAS: Column Address Strobe)

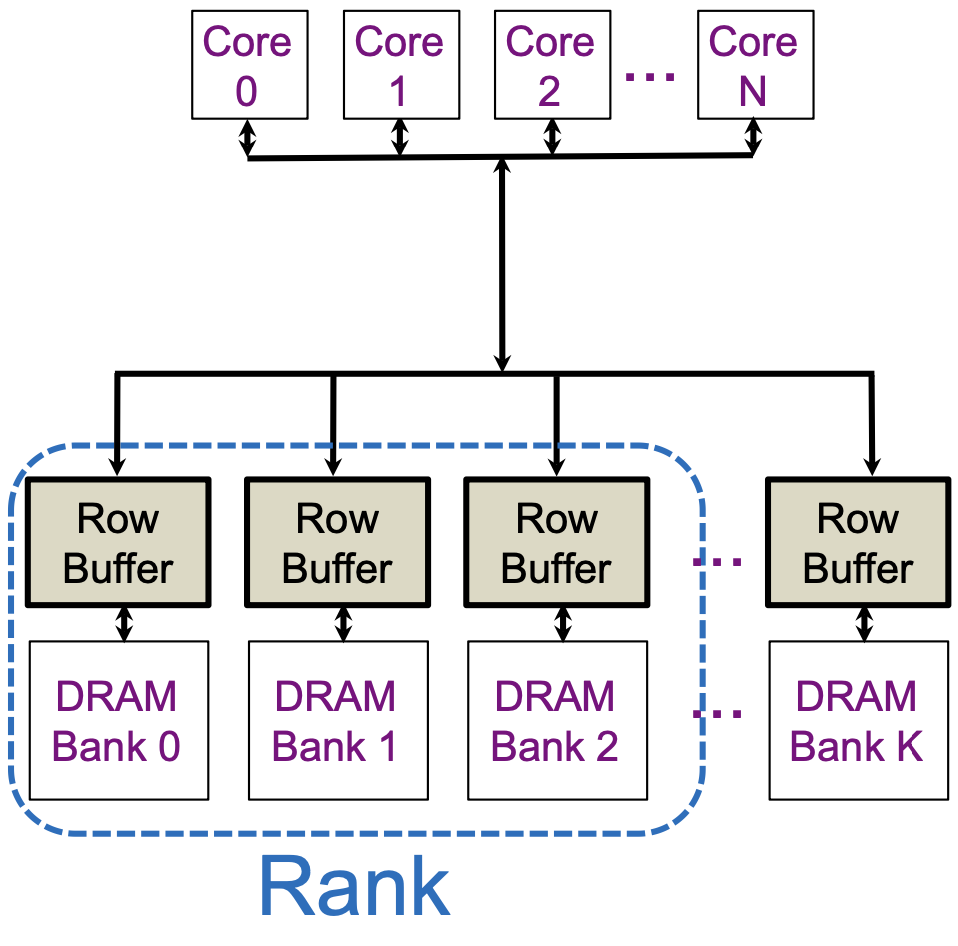

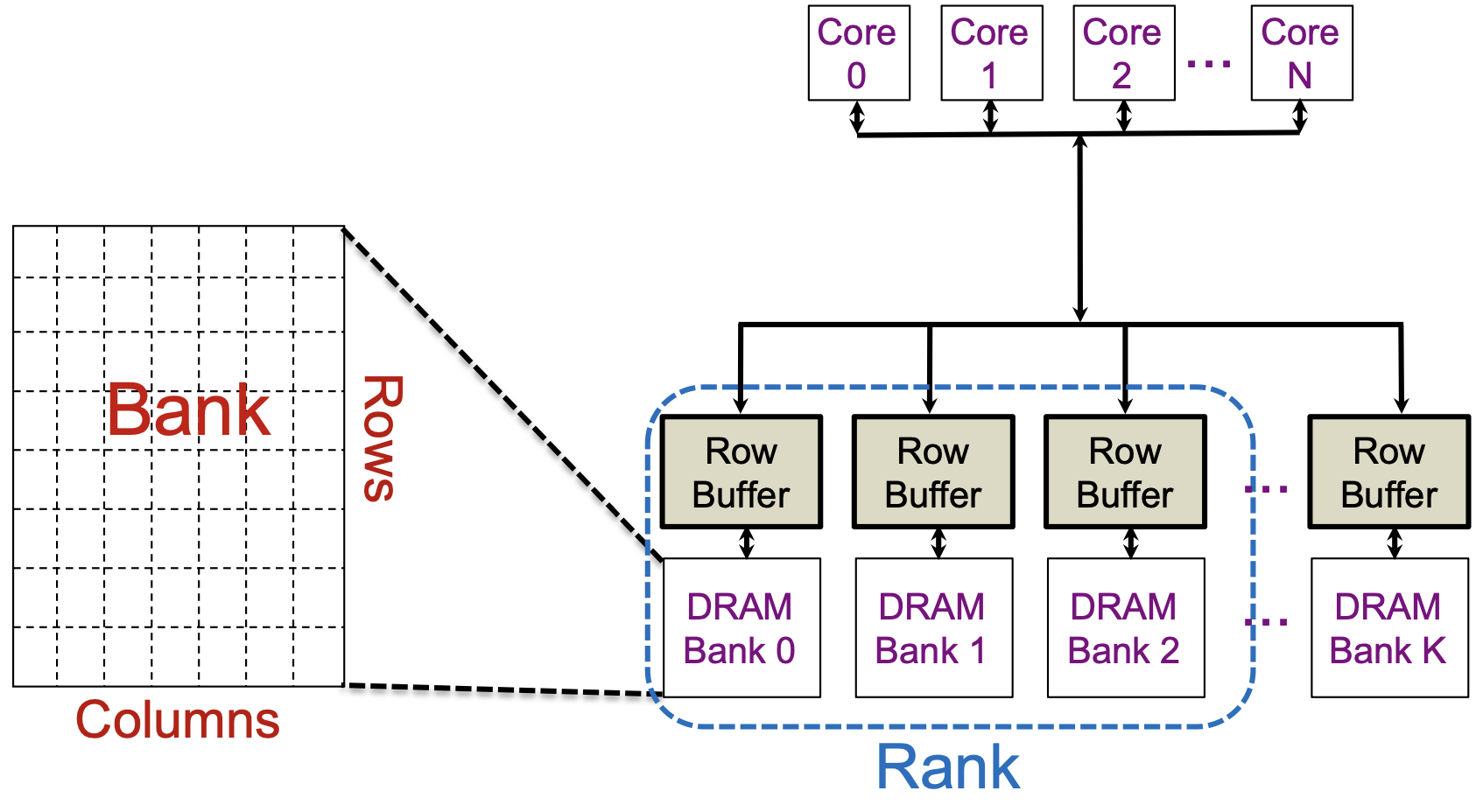
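A minimal sketch of the multiplexed address interface described above: one cell address is split into a row half and a column half, which would travel over the same pins in two steps. The bit widths `ROW_BITS` and `COL_BITS` and the function name `split_address` are illustrative assumptions, not values from the lecture.

```python
# Hypothetical geometry: 2**ROW_BITS rows by 2**COL_BITS columns.
ROW_BITS = 4
COL_BITS = 4

def split_address(addr):
    """Split a cell address into (row, column) for a two-step transfer."""
    if not 0 <= addr < (1 << (ROW_BITS + COL_BITS)):
        raise ValueError("address out of range")
    row = addr >> COL_BITS              # upper bits: sent first, signaled by RAS#
    col = addr & ((1 << COL_BITS) - 1)  # lower bits: sent second, signaled by CAS#
    return row, col

row, col = split_address(0b1010_0011)
print(row, col)  # 10 3
```

With this split, an 8-bit address needs only 4 address pins instead of 8, at the cost of sending the address in two steps.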

DRAM Organization

- DRAM: set of banks

- bank: independent memory request processing.

- # banks == parallelism degree.

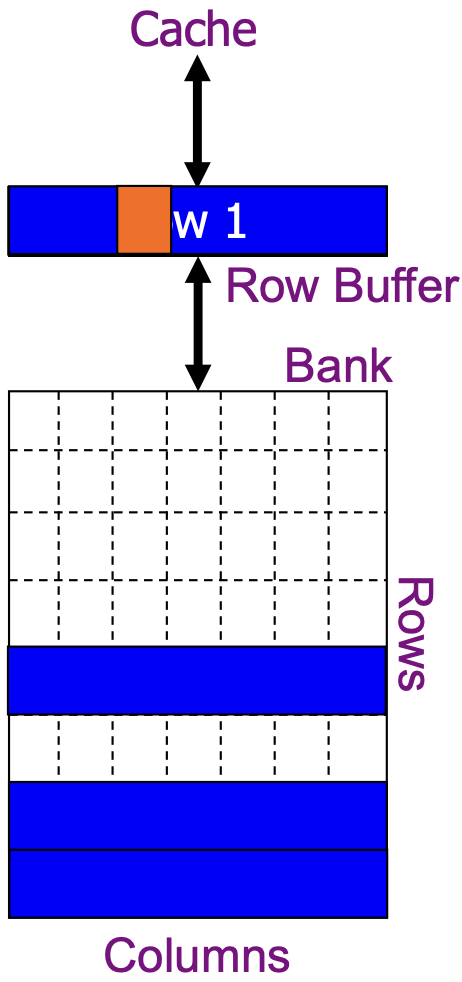

- Row buffer: bank-private cache area. (=> faster than accessing the DRAM array itself)

- 4-16kB in size

- Shared by all CPU cores

- (cf. Rank = 4-16 banks)

“If the banks can be accessed concurrently, spreading sequential data across multiple banks is better.”
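One way to picture this is a simple interleaved mapping, where consecutive blocks go to consecutive banks. The sketch below is only an illustration: the bank count `NUM_BANKS`, the interleaving granularity `BLOCK_BYTES`, and the helper `bank_of` are assumptions, and real memory controllers may use different address mappings.

```python
NUM_BANKS = 4     # assumed number of banks
BLOCK_BYTES = 64  # assumed interleaving granularity

def bank_of(addr):
    """Return the bank that holds the block containing this byte address."""
    return (addr // BLOCK_BYTES) % NUM_BANKS

# Eight sequential blocks rotate through the banks.
addrs = [i * BLOCK_BYTES for i in range(8)]
print([bank_of(a) for a in addrs])  # [0, 1, 2, 3, 0, 1, 2, 3]
```

Because neighbouring blocks land in different banks, a sequential stream can keep several banks busy at once instead of queueing on one.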

DRAM Operations

(In? Column-first scheduling)

1) row-hit access 2) row-miss access

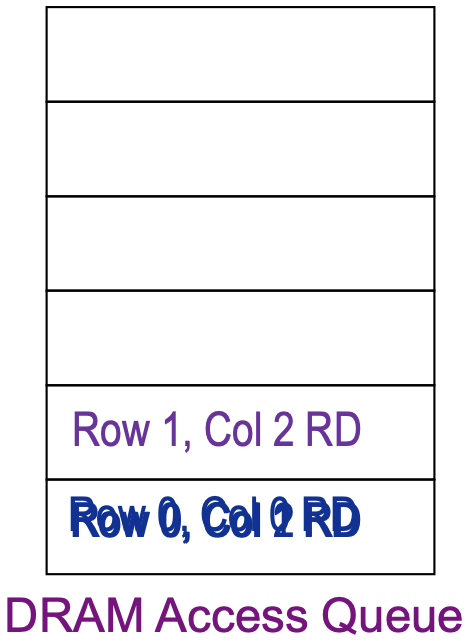

DRAM access scenario

- Three column reads to row 0, one col read to row 1.

Access scheduling sequence

| # | Row (RAS#) | Column (CAS#) | Result |
|---|---|---|---|
| 1 | 0 | 0 | row-miss |
| 2 | 0 | 1 | row-hit |
| 3 | 1 | 2 | row-miss |
| 4 | 1 | 2 | row-miss |

Explanation

- Access 1: Row miss, so an ACT (row activation) is issued.

- The initial access always misses, because the row buffer is still empty.

- Right after the miss, the corresponding row is loaded into the row buffer.

- Here, the row's data is accessed in the DRAM for the first time.

- Access 2: Row hit. The ACT for the access to row 0, col 0 already loaded row 0 into the row buffer, so later accesses to row 0 are served from it.

- Access 3: Row miss, so an ACT is issued, because row 1's data has not yet been loaded into the row buffer.

- The row buffer is then filled with row 1.

- Access 4: Row miss, "ACT". Why? (Probably because the tag bits differ.)

In case of row-miss, the row buffer gets the data from the DRAM.
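The row-hit / row-miss behaviour can be sketched with a very simplified model: a single bank whose row buffer keeps the most recently activated row (an open-row policy). This is an assumption for illustration only; it ignores timing, precharge, and scheduling, and the name `classify_accesses` and the column numbers in the trace are made up. The trace follows the scenario "three column reads to row 0, one column read to row 1".

```python
def classify_accesses(accesses):
    """Label each (row, col) access as 'row-hit' or 'row-miss'.

    Simplified model: one bank, and the row buffer holds the last
    activated row until a different row is requested.
    """
    open_row = None  # the row buffer starts empty
    results = []
    for row, _col in accesses:
        if row == open_row:
            results.append("row-hit")
        else:
            results.append("row-miss")  # ACT: load this row into the buffer
            open_row = row
    return results

# Three column reads to row 0, then one column read to row 1.
trace = [(0, 0), (0, 1), (0, 2), (1, 0)]
print(classify_accesses(trace))
# ['row-miss', 'row-hit', 'row-hit', 'row-miss']
```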

Memory Hierarchy: Principle of Locality

terms:

- temporal locality: The locality principle stating that if a data location is referenced, it will tend to be referenced again soon.

- spatial locality: The locality principle stating that if a data location is referenced, data locations with nearby addresses will tend to be referenced soon.

Temporal Locality

Recently accessed items are likely to be accessed again soon.

e.g. looping variables (induction variables), instructions in a loop

Spatial Locality

Items near recently accessed one are likely to be referenced/accessed soon.

e.g. sequential instruction accesses (e.g. consecutive 4-byte instructions), array data

Taking advantage of this,

- Store everything on disk

- Copy recently accessed (and nearby, by spatial locality) items from disk to smaller DRAM memory: the main memory

- Copy more recently accessed (and nearby) items from DRAM to smaller SRAM memory (temporal locality?): the cache memory attached to the CPU

- Together, these levels form the memory hierarchy

Access Hits and Misses

- Block (line): The unit of data held in a cache.

- Hit: The accessed data is present in the upper level. (A memory access found in a level of the memory hierarchy.)

- Hit ratio: hits/accesses

- Miss: The accessed data is absent from the upper level. (A memory access not found in a level of the memory hierarchy.)

- => Copy the block from the lower level into the upper level.

- Time taken: miss penalty (access to the lower level + access to the upper level)

- Then the accessed data is supplied from the upper level.

- Miss ratio: misses/accesses = 1 - hit ratio
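The hit and miss definitions above can be checked with a small counting sketch. It assumes an upper level that never evicts anything and a block size of 16 bytes; both assumptions, and the names `run_trace` and `BLOCK_BYTES`, are only for illustration.

```python
BLOCK_BYTES = 16  # assumed block size

def run_trace(addresses):
    """Count hits and misses for a list of byte addresses."""
    upper_level = set()  # blocks currently held in the upper level
    hits = misses = 0
    for addr in addresses:
        block = addr // BLOCK_BYTES
        if block in upper_level:
            hits += 1
        else:
            misses += 1
            upper_level.add(block)  # copy the block from the lower level
    return hits, misses

hits, misses = run_trace([0, 4, 8, 12, 16, 0, 64, 68])
accesses = hits + misses
print(hits, misses)                        # 5 3
print(hits / accesses, misses / accesses)  # 0.625 0.375
```

The two ratios always sum to 1, which is the "miss ratio = 1 - hit ratio" relation from the list.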

Caches

Cache memory: The level of the memory hierarchy closest to the CPU (other than the registers)

Given accesses, we have 2 Questions:

- Where do we look?

- How do we know whether the data is present?

Cache organization

- memory hierarchy

My own summary: Tag array + Data array

- Address composition (of fields)

- tag

- (cache) index

- block offset

- byte offset (within a word)
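A minimal sketch of how an address could be split into these fields. The geometry is assumed for illustration and not taken from the notes: 32-bit addresses, 4-byte words, 4 words per block, and 256 blocks in a direct-mapped cache. Here "block offset" is read as the word within the block and "byte offset" as the byte within the word.

```python
BYTE_OFFSET_BITS = 2  # assumed: 4-byte words
WORD_OFFSET_BITS = 2  # assumed: 4 words per block
INDEX_BITS = 8        # assumed: 256 blocks in the cache

def split_fields(addr):
    """Split a 32-bit address into tag, index, block offset and byte offset."""
    byte_offset = addr & ((1 << BYTE_OFFSET_BITS) - 1)
    addr >>= BYTE_OFFSET_BITS
    block_offset = addr & ((1 << WORD_OFFSET_BITS) - 1)
    addr >>= WORD_OFFSET_BITS
    index = addr & ((1 << INDEX_BITS) - 1)
    tag = addr >> INDEX_BITS  # the remaining upper bits
    return {
        "tag": tag,
        "index": index,
        "block_offset": block_offset,
        "byte_offset": byte_offset,
    }

fields = split_fields(0x12345678)
print(hex(fields["tag"]), hex(fields["index"]),
      fields["block_offset"], fields["byte_offset"])
# 0x12345 0x67 2 0
```

The index answers "where do we look?", and comparing the stored tag with the address tag answers "is the data present?".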
